The Mechanized God’s Eye — Creating a Clergy?

Imagine descrying machines could make legible the otherwise veiled intimacies of another’s thoughts and propensities — even if this capability existed, would it be moral to condemn or exonerate someone based on a brain scan’s revelations? Could machines predict innocence and guilt? Would such technologies claim “a priestly role” for those wielding them? An emergent domain of computational forensic psychiatry dubbed “neuroprediction” disconcertingly brings once-fantastical concerns of mind-reading to bear in the contemporary world. This article cautions that neuropredictive endeavors implemented in carceral contexts could constitutively foreclose democratic possibility.

The concept of coproduction asserts that scientific products and technologies “embody beliefs not only about how the world is, but also how it ought to be. Natural and social orders, in short, are produced.” This view underpins the argument that neuroprediction in carceral contexts is not in danger of becoming but already is an instantiation of modern phrenology. Such technologies constitute sociotechnical imaginations of possibility, democracy, freedom, discipline, agency, “us,” and “them” in the here and now.

Assumed Ontological Difference

This is Your Brain, This is Your Brain on…Crime?

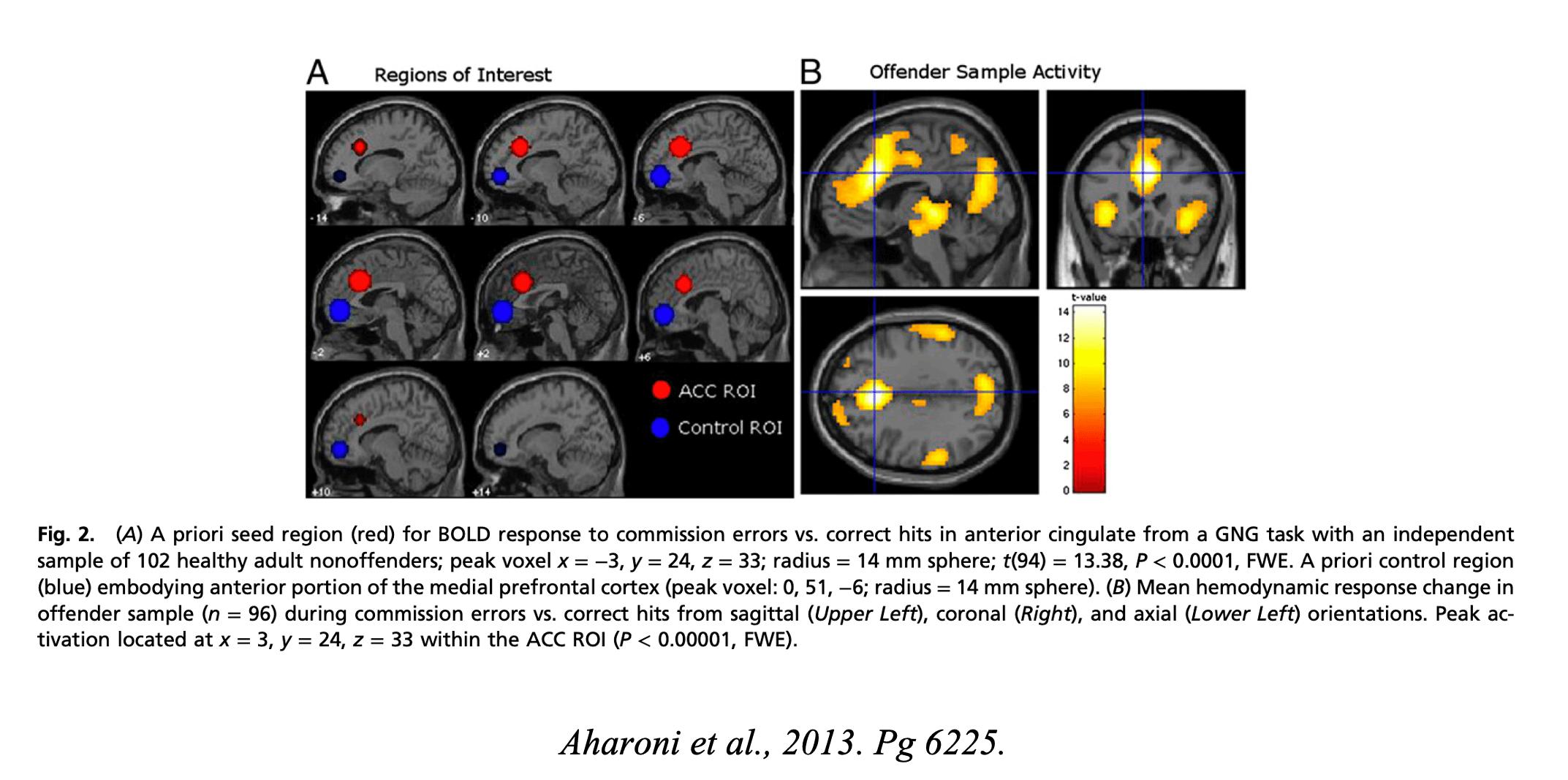

The scientific study that serves as my point of departure uses neuroimaging to “view” blood flow in a brain region it posits as “the anatomical region associated with rearrest,” and splits participants into a fraught binary of “offenders” and “non-offenders” to study the differences in group performance on a simple go/no-go task administered in an MRI machine. Ultimately, the study concludes that its results “suggest a potential neurocognitive biomarker for persistent antisocial behavior.” Sense-making of newly-rendered-visible blood flow in individual brains gets (mis)construed as mind-reading. Thus, human futures become known and prophesied via machine, before individuals are free to choose for themselves in the world.

Who Becomes Subject(ed)?

Picturing an “Offender” is No Innocent Task

Explicit racial and socioeconomic disparities in America’s policing and criminal justice systems realize “offender,” “criminal,” “risk” and “arrest” as fraught categories. The United States disproportionately incarcerates low-income people of color; thus, essentializing criminality to create the category of a “criminal brain” must be read in the context of the criminal justice system’s racial and class disparities. From the outset, distinguishing “offender” from “nonoffender” brains to research the assumed neurological differences of these two constructed categories reifies problematic binaries of offender/nonoffender, innocent/guilty, good/evil. Envisioning people’s brains (which neuroprediction equates to mind, identity, and predilection) through computerized eyes is messy, fraught, human work: complex machinery and artificial intelligence fail to absolve neuroimaging of a necessarily limited, perspectival situatedness. The automated view is not that of a God’s eye but rather a cyborgian vision, both mechanized and humanly fallible.

Technology that presupposes an ontological difference between “offenders” and other persons (i.e., that people commit crimes because there is something wrong with their brains) is flawed by design. Dangers of claims to “read” minds are un-isolable from motivations to “(re)write” defective consciousnesses. Using neuroprediction in sentencing and parole decisions denies incarcerated people entry to categories of goodness, worthiness, and full personhood, excising them from the body politic by casting them as “defects” and “risks.” At their most basic level, carceral neuroprediction projects produce knowing machines: human-wielded technologies that threaten to reduce entire people to knowable and known subjects, thereby sanctioning machine-based determinism and foreclosing democratic possibility.

Guilty Upon Arrest — The Justice Machine & Flimsy Correlation

In failing to distinguish between arrest and conviction of a crime, this study presumes formerly incarcerated persons who are rearrested following their release from prison to be guilty of “recurrent antisocial behavior.” Amalgamating arrest and “guilt” misses the fundamental dynamic that arrest is something done to the arrested party, rather than a behavior executed by the arrested party. Arrest does not guarantee conviction, and conviction does not guarantee objective guilt. This mischaracterization of policing and the criminal justice system fails to recognize arrest as merely the event that (ideally) initiates due process, and those processes of determination themselves rely upon fallible, human-centric modes of knowing and sense-making. Nor, obviously, does every instance of illegal behavior precipitate arrest.

Neuroimaging-focused modes of sense-making and envisioning the world “could become a sort of modern phrenology, by discriminating between people based on what their brain looks like.” Presuppositions about risk, criminality, and fundamental difference of the brains of incarcerated and non-incarcerated individuals poise the study to “discover” the brain in particular ways, ways that are themselves circumscribed by carceral state logic. Notions of “risk” or “enhancement of public safety” must be critically questioned: risk to whom? Machine-centric “neuroprediction” of recidivism presages a crucial epistemic shift with ontological implications.

Assuming that machine-centric modes of knowing and determining such as neuroprediction are superior to, or inherently more accurate than, other epistemic methods casts human-centric methods as mere proxies, implying that neuroimaging has the capacity to unveil a determined, metaphysical Truth about an individual’s propensity for criminality. The implication is that, using neuroimaging data plugged into A.I. risk algorithms, machines could know or accurately predict human behavior before we (the behaving humans) know.

Are we playing God?

Iconography & Holy Texts

In neuroprediction, humans seek to exceed humanity via machines. Such logics presuppose pre-destined answers, and in grasping for the Good and the True, this thinking turns eyes away from coproductive analytics that situate us both as makers and made, creators and created. Imagining these technologies as made in our image, rather than free from human imprint, we might better address their potential perils, particularly of eugenics and phrenology potentially lurking amidst present “computational psychiatry.”

Where Do We Go from Here?

Envisioning Opacity & Future Otherworlds

Logic undergirding neuroprediction in carceral contexts presupposes that human fallibility is a fundamental flaw in need of a non-human corrective, in need of objective Truth. This line of thinking assumes shifting to a machine-centric mode of knowing would remedy fallibility altogether. Are mind-reading machines part of the solution?

Opacity constitutes a fundamental tenet of human-centric knowing — we do not presume to be able to read one another’s thoughts. Rather, we conjecture intentionality based on behavioral patterns. Machine-centric neuroimaging presumes humans to be knowable and known in an objective, rather than empirical sense. However, I disagree that objectivity is a necessary (or even desirable) metric for negotiating morals and evaluating behavior.

In Poetics of Relation, Édouard Glissant eschews the right to difference in favor of what he calls the “right to opacity.” He writes, “the theory of difference…has allowed us to struggle against the reductive thought produced, in genetics, for example, by the presumption of racial excellence or superiority…But difference itself can still contrive to reduce things to the Transparent.” Difference, he argues, is predicated upon the following subject-other dynamic: “I admit you to existence, within my system. I create you afresh.” Opacity, however, provides a more liberatory starting point, as it does not require that one be “grasped” and brought close to another’s oculus for descrying or examination — thus it requires no reduction. He concludes:

Opacity is…the force that drives every community: the thing that would bring us together forever and make us permanently distinctive. Widespread consent to specific opacities is the most straightforward equivalent of non-barbarism. We clamor for the right to opacity for everyone.

In the face of mechanized attempts to reduce human beings and our consciousnesses to lucidity, clarity, legibility, and coherence for the sake of judgement, a right to opacity seems to be a worthy guiding tenet. Perhaps, as Glissant suggests, democracy is contingent upon, rather than threatened by, each of our multiple opacities. Let’s not sacrifice that most visible invisibility to non-human minds and technovision’s creative capacities to reveal the world in distinctly inhuman (un)truthful ways.

References:

Alexander, Michelle. 2020. The New Jim Crow. Tenth anniversary edition. La Vergne: The New Press.

Aharoni, Eyal, et al. “Neuroprediction of Future Rearrest.” Proceedings of the National Academy of Sciences – PNAS, vol. 110, no. 15, National Academy of Sciences, 2013, pp. 6223–28, https://doi.org/10.1073/pnas.1219302110.

Aharoni, Eyal, et al. “Predictive Accuracy in the Neuroprediction of Rearrest.” Social Neuroscience, vol. 9, no. 4, Routledge, 2014, pp. 332–36, https://doi.org/10.1080/17470919.2014.907201.

Buck v. Bell, 274 US 200 – Supreme Court 1927.

Calderón, Andrew Rodriguez. “A Dangerous Brain.” The Marshall Project, August 15, 2018. https://www.themarshallproject.org/2018/08/14/a-dangerous-brain.

Foucault, Michel. 1988. The History of Sexuality. Vol 1. “Biopower.” 1st Vintage Books ed. New York: Vintage Books. Pg. 135-145.

Fanon, Frantz, Richard Philcox, Jean-Paul Sartre, Homi K. Bhabha, and EBSCOhost. 2004. The Wretched of the Earth. New York: Grove Press.

Glenn, Andrea L, and Adrian Raine. 2014. “Neurocriminology: Implications for the Punishment, Prediction and Prevention of Criminal Behaviour.” Nature Reviews. Neuroscience 15 (1): 54–63. https://doi.org/10.1038/nrn3640.

Glissant, Édouard, and Betsy Wing. 1997. Poetics of Relation. “For Opacity.” Ann Arbor: University of Michigan Press. Pg. 189-194.

Gray, Lindsay. “What Does a Guilty Brain Look Like?” Scientific American, November 18. https://www.scientificamerican.com/article/what-does-a-guilty-brain-look-like/.

Hacking, Ian. 1999. The Social Construction of What? Cambridge, Mass: Harvard University Press.

Haraway, Donna. 1985. “A Cyborg Manifesto,” in Philosophy of Technology: The Technological Condition, An Anthology, ed. Val Dusek and Robert C. Scharff, Second (Oxford: John Wiley & Sons, Inc, 2014), 610-630.

Harvard Library. “Scientific Racism.” Image. Accessed May 2, 2022. https://library.harvard.edu/confronting-anti-black-racism/scientific-racism.

Jasanoff, Sheila. 2005. Designs on Nature: Science and Democracy in Europe and the United States. Course Book. Princeton, N.J.: Princeton University Press.

Jasanoff, Sheila. 2011. Reframing Rights: Bioconstitutionalism in the Genetic Age. Cambridge, MA: MIT Press.

Jasanoff, Sheila. 2019. Can Science Make Sense of Life? Cambridge, UK; Medford, MA: Polity Press.

“MAGNETOM Avanto Fit 1.5T Eco – Used MRI Machine.” Image. Siemens Healthineers. Accessed May 1, 2022. https://www.siemens-healthineers.com/en-us/refurbished-systems-medical-imaging-and-therapy/ecoline-refurbished-systems/magnetic-resoncance-imaging-ecoline/magnetom-avanto-fit-eco.

Metzl, Jonathan. 2009. The Protest Psychosis: How Schizophrenia Became a Black Disease. Boston: Beacon Press.

Kiehl, Kent A., et al. “Age of Gray Matters: Neuroprediction of Recidivism.” NeuroImage Clinical, vol. 19, Elsevier Inc, 2018, pp. 813–23, https://doi.org/10.1016/j.nicl.2018.05.036.

Kinzer, Stephen. 2019. Poisoner in Chief: Sidney Gottlieb and the CIA Search for Mind Control. First edition. New York: Henry Holt and Company.

Rose, Nikolas S. 2007. Politics of Life Itself: Biomedicine, Power, and Subjectivity in the Twenty-First Century. “Biological Citizens.” Princeton: Princeton University Press. Pg. 130-154.

The Sentencing Project. “Capitalizing on Mass Incarceration: U.S. Growth in Private Prisons.” Accessed May 2, 2022. https://www.sentencingproject.org/publications/capitalizing-on-mass-incarceration-u-s-growth-in-private-prisons/.

The Sentencing Project. “Report to the United Nations on Racial Disparities in the U.S. Criminal Justice System,” April 19, 2018. https://www.sentencingproject.org/publications/un-report-on-racial-disparities/.

Skinner v. Oklahoma ex rel. Williamson, 316 US 535 – Supreme Court 1942.

Steele, Vaughn R., et al. “Multimodal Imaging Measures Predict Rearrest.” Frontiers in Human Neuroscience, vol. 9, Frontiers Research Foundation, 2015, pp. 425–425, https://doi.org/10.3389/fnhum.2015.00425.

Tortora, Leda, et al. “Neuroprediction and A.I. in Forensic Psychiatry and Criminal Justice: A Neurolaw Perspective.” Frontiers in Psychology, vol. 11, Frontiers Media SA, 2020, pp. 220–220, https://doi.org/10.3389/fpsyg.2020.00220.

U.S. Const. amend. XIII. §1.

U.S. Const. amend. XIV. §1.

U.S. Department of Labor. “State Minimum Wage Rate for New Mexico.” FRED, Federal Reserve Bank of St. Louis, January 1, 1968. https://fred.stlouisfed.org/series/STTMINWGNM.

White, Martha C. “‘This Is Your Brain on Drugs,’ Tweaked for Today’s Parents.” The New York Times, August 7, 2016, sec. Business. https://www.nytimes.com/2016/08/08/business/media/this-is-your-brain-on-drugs-tweaked-for-todays-parents.html.

Sheila Jasanoff, “Can Science Make Sense of Life?” Pg. 7: “Representing the human genome as the book of life, written in the plain four-letter code of DNA, implicitly claims for biologists a priestly role: as the sole authorized readers of that book, those most qualified to interpret its mysteries and draw out its lessons for the human future.”

This article’s analysis departs from the United States-based study “Neuroprediction of Future Rearrest” (Aharoni et al., 2013) and expands upon critiques of this and similar studies laid out in the 2020 article “Neuroprediction and A.I. in Forensic Psychiatry and Criminal Justice: A Neurolaw Perspective” (Tortora et al., 2020).

The neuroimaging portion of this study focuses on the anterior cingulate cortex (ACC), a brain region purportedly “associated with error processing, conflict monitoring, response selection, and avoidance learning” (Aharoni et al., 6223). Disturbingly, the study also describes the ACC as “the anatomical region associated with rearrest” (Aharoni et al., 6223).

Aharoni et al., 2013. Pg. 6224.

Participants were randomly presented with either the letter “X” (appearing more frequently) or the letter “K” (appearing less frequently). They were told to press a button with their pointer finger whenever they saw an “X,” but to refrain from pressing the button whenever a “K” appeared. The study asserts that “successful performance on this task requires the ability to monitor error-related conflicts and to selectively inhibit the prepotent go response on cue,” which establishes a syllogism between poor performance on this task and “impulsivity.” The assumption chain of purported correlation is as follows: poor performance on the go/no-go task → extrapolated to indicate poor behavior regulation in general → extrapolated to indicate increased predisposition to “antisocial behavior” → extrapolated to suggest higher individual propensity for “crime.” Realistically, how much can be extrapolated from one’s ability to accurately press a button corresponding with a letter on a screen? Is it moral to pre-determine a person’s guilt based on the images of blood flow in one area of the brain during such a trivial task?

Aharoni et al., 2013. Pg. 6223. Italics my own.

Given this study’s situatedness in the United States’ carceral landscape and the potential profitability of neuropredictive technologies, the U.S. Constitution’s Thirteenth Amendment must not be ignored. The amendment reads: “Neither slavery nor involuntary servitude, except as a punishment for crime whereof the party shall have been duly convicted, shall exist within the United States, or any place subject to their jurisdiction” (U.S. Const. amend. XIII. §1). Legal scholar Michelle Alexander writes in The New Jim Crow: Mass Incarceration in the Age of Colorblindness, “The Thirteenth Amendment to the U.S. Constitution had abolished slavery but allowed one major exception: slavery remained appropriate as punishment for a crime” (Alexander, 39). Alexander goes on to quote the Virginia Supreme Court case Ruffin v. Commonwealth to claim that “the court put to rest any notion that convicts were legally distinguishable from slaves” (Alexander, 39). The text she cites from that case reads: “For a time, during his service in the penitentiary, he is in a state of penal servitude to the State. He has, as a consequence of his crime, not only forfeited his liberty, but all his personal rights except those which the law in its humanity accords to him. He is for the time being a slave of the State. He is civiliter mortuus; and his estate, if he has any, is administered like that of a dead man” (Alexander, 39). Emphasis my own. American chattel slavery was an economic system, and enslavement is still permitted in this “free” country; therefore incarceration must be profitable for some, and sale of technologies claiming to possess “neuropredictive” powers may yet prove to be quite lucrative.

The Sentencing Project, “Report to the United Nations on Racial Disparities in the U.S. Criminal Justice System.” See also Alexander, Michelle. The New Jim Crow. “1: The Rebirth of Caste,” in which Alexander argues that mass incarceration perpetuates what she dubs a racialized “caste system” that began with chattel slavery, morphed into Jim Crow, and continues on with today’s prison industrial complex.

The neuroimaging portion of this study focuses on the anterior cingulate cortex (ACC), a brain region purportedly “associated with error processing, conflict monitoring, response selection, and avoidance learning” (Aharoni et al., 2013. Pg. 6223). Disturbingly, the study also describes the ACC as “the anatomical region associated with rearrest” (Aharoni et al., 2013. Pg. 6224). Study organizers justify zeroing in on this brain area specifically because of animal lesion studies that claim to establish a causal relationship between ACC damage and difficulty regulating behavior (Aharoni et al., 2013. Pg. 6223). Though the jump from animal brains to human brains may have established precedent in neuroscience research, it gives me pause as a non-scientist. Why is there such seamlessness in comparing animal and “offender,” i.e., “criminal,” brains? Methodology for assessing “behavior regulation” in the cited animal studies is not specified, further calling into question why that research is considered to be a valid precedent for and parallel to the human subject research under interrogation here. The need to situate the Aharoni et al. study in the explicitly racial dimensions of mass incarceration in the United States becomes especially clear, because only with that crucial historical and contemporary context can we appropriately caution against such leaps to associate the human “criminal” with the animal. The legacy of scientific racism and white supremacy does not reside exclusively in the past.

Haraway, Donna. “A Cyborg Manifesto.”

Narratively weaving together correlation between cognitive control and “antisocial behavior” with brain-scan images and statistics, the study claims that: This pattern supports the view that neurocognitive endophenotypes carry the potential to characterize underlying traits and defects independently of behavioral phenotypes, such as self-report instruments and expert-rater diagnoses based on client interviews and collateral historical information. Finally, this work highlights potential neuronal systems that could be targeted for treatment intervention (Aharoni et al., 2013. Pg. 6223. Italics my own).